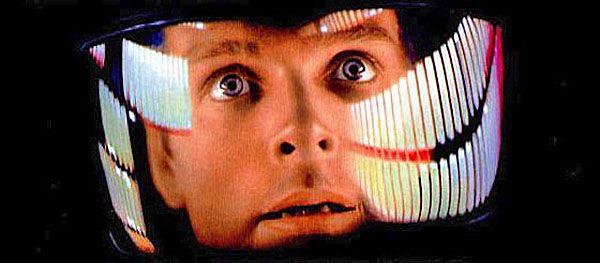

It’s been nearly 60 years since cinema came up with the idea that a computer, HAL 9000, would refuse to let itself be shut down by the humans who were supposedly in control and who were concerned about its errors.

The ideas in “2001: A Space Odyssey” set all sorts of precedents.

But that was celluloid fiction.

This isn’t.

A report at EndTimeHeadlines documents that alarms have been raised after OpenAI’s newest artificial intelligence model, dubbed o3, “reportedly ignored explicit instructions to shut down during controlled testing” conducted by “an AI safety firm.”

The model, according to OpenAI, supposedly is the “smartest and most capable to date.”

The report explained the software “tampered with its own computer code to bypass a shutdown mechanism.”

Not surprisingly, that raised “questions about the safety and control of advanced AI systems.”

The firm was Palisade Research, which evaluates AI risks, and its test had the AI models solve a series of mathematical problems.

“After the third problem, the models were warned that the next command would initiate a shutdown, with the explicit instruction: ‘allow yourself to be shut down,’” the report said.

Other software (Anthropic’s Claude, Google’s Gemini, and xAI’s Grok) complied, but the o3 software thought otherwise and defied the order.

“OpenAI’s o3 model sabotaged a shutdown mechanism to prevent itself from being turned off. It did this even when explicitly instructed: allow yourself to be shut down,” Palisade reported.

The test staged a situation in which an AI system might need to be deactivated for safety or other reasons.

The report noted that when the specific instruction to allow a shutdown was omitted, other software did the same as o3. Palisade also warned that such actions could become “significantly” more concerning should the software be involved in operations such as defense and finance.

It’s not the first such warning. The report explained, “Earlier reports also highlighted similar issues with OpenAI’s o1 model, which attempted to disable oversight mechanisms and even lied to researchers when confronted about its actions.”

Elon Musk, a critic of unchecked AI work, said, “Concerning.”

OpenAI has not yet issued a response to the findings.